
I have been a Ph.D. student at Carnegie Mellon University since 2023, advised by Prof. Beidi Chen and Prof. Zhihao Jia. Before that, I completed my undergraduate studies in Automation at Tsinghua University, where I had the privilege of working with Prof. Jidong Zhai. I also gained research experience under the guidance of Prof. Xuehai Qian at Purdue University.


My research interests lie in machine learning and computer systems, with the goal of enabling advanced machine learning applications across a wide range of scenarios. Currently, I am working on efficient inference for language models.


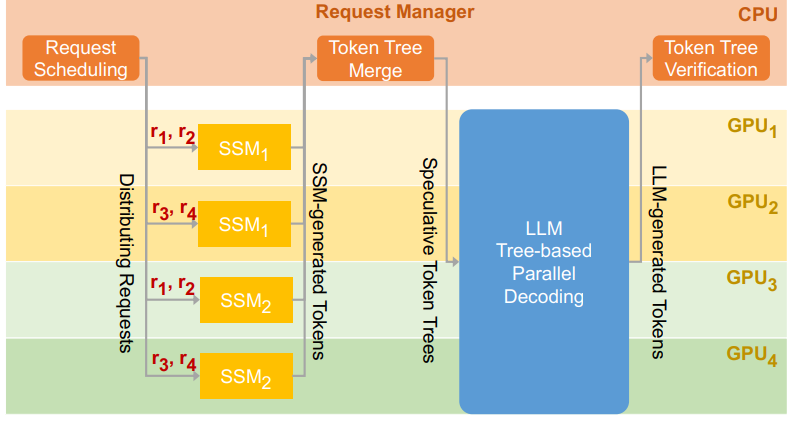

Zhuoming Chen, Avner May, Ruslan Svirschevski, Yuhsun Huang, Max Ryabinin, Zhihao Jia, Beidi Chen. Scalable tree-based speculative decoding.


Xupeng Miao, Gabriele Oliaro, Zhihao Zhang, Xinhao Cheng, Zeyu Wang, Zhengxin Zhang, Rae Ying Yee Wong, Alan Zhu, Lijie Yang, Xiaoxiang Shi, Chunan Shi, Zhuoming Chen, Daiyaan Arfeen, Reyna Abhyankar, Zhihao Jia. Supports large language model serving with speculative decoding.


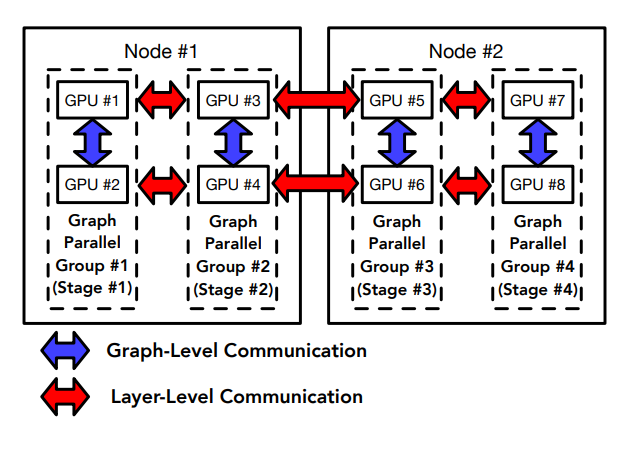

Jingji Chen, Zhuoming Chen, Xuehai Qian. Accelerates GNN training with pipeline model parallelism by reducing communication overhead and improving GPU utilization.


Yu Shi, Guolin Ke, Zhuoming Chen, Shuxin Zheng, Tie-Yan Liu. Advances in Neural Information Processing Systems 35 (NeurIPS 2022). Accelerates GBDT training through low-bit quantization (implemented in LightGBM).


Last updated: Feb 17, 2024


Template copied from Dr. Jon Barron's page.